Open-source for PhD students

Contributing to open-source is for everyone, especially PhD students!

As a PhD student moving to a new city at the height of the COVID-19 pandemic, I found that remote schooling inadvertently put a strain on learning and networking. However, this also presented an opportunity to be immersed in an environment that thrived in the online format: open-source. My current involvement with the community of PyMC, a Python library for Bayesian modeling, started from being curious and bored during lockdown and was catalyzed by stumbling across an opportunity to contribute to the codebase via Google Summer of Code (GSoC). Now, I am working part-time for PyMC Labs, and I will start my second summer as a GSoC student and take the time to learn more about Dirichlet Processes (DP), Aesara and AePPL under the mentorship of Ricardo Vieira and Brandon Willard. Above all, I feel happy. Here is my journey so far.

February 2020: Pre-Pandemic

My last 5 years as a student at McGill University were amazing. I met incredible people and I really enjoyed learning in this environment: Montreal’s liveliness, my part-time job as a campus tour guide and lots of statistical theory. Above all, I spent countless hours in the most beautiful building on our campus: Burnside Hall.

This statement is unironic despite the building’s exterior, which has the concrete-slab look of a Minecraft dungeon. On the inside, the walls were no different but, despite its underwhelming appearance, there was something that energized me on a daily basis: a vibrant community. No matter the position of the sun, there were always people around to talk about math, programming or statistics, or to vent with when school or life became stressful. As the pandemic hit on Friday, March 13, 2020, I knew that it would take a while before I could be surrounded by the same concrete and people again.

July 2020: Beta Variant and DP Summer School

The first pandemic summer was not terrible as I spent most of my time finishing my Master’s thesis and mentally preparing for my upcoming PhD studies. I was skateboarding more and eating far too much fruit yogurt, just because I could. I missed my biostat and Burnside friends very much, but we were still able to keep in touch via Zoom.

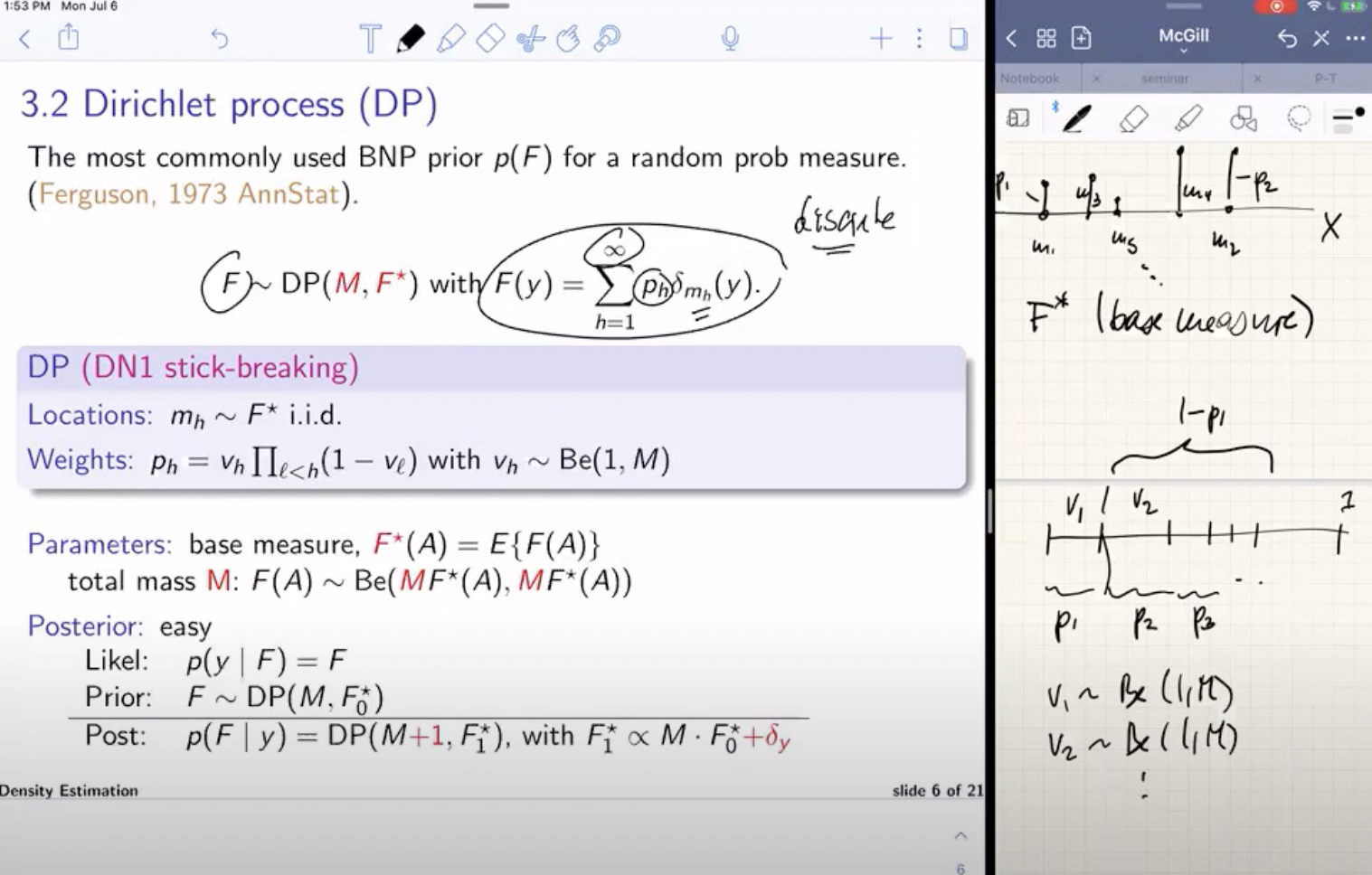

Dr. Alexandra Schmidt, a Bayesian professor at McGill’s EBOH department, was a big inspiration of mine for learning about Bayesian statistics. That summer, she organized a workshop on Dirichlet Processes taught by Dr. Peter Mueller, a well-established researcher in that area. Admittedly, I did not understand anything beyond the first 20 minutes of the first lecture but I found the whole paradigm very fascinating. Little did I know that this exposure to such a non-parametric method was the beginning of something much bigger for me.

September 2020: The Beginning of the PhD

It sucked.

I was welcomed to our new school via a Zoom call as I sat in my parents’ basement, a few days after submitting my Master’s thesis, which was itself a few days after a remote break-up. I knew that the school year would be a long one. I must thank Dr. Anna Heath, with whom I did a year-long project on Bayesian analysis of clinical trials, for her guidance and moral support in keeping me afloat during the difficult school year.

April 2021: Applying to GSoC

After my winter semester, my constant efforts to socially engage with my cohort via Zoom and the ongoing academic workload were starting to tire me. With our three-part comprehensive exams scheduled for August in sight, I knew that I needed a change. Regardless of the outcome of my theory exams, I had a strong urge to devote my energy and eagerness to do something else. One day in March, as I was browsing my Twitter feed, I came across the following Tweet.

To be frank, I thought that the PyMC project was previously in a rough spot because it relied on Theano, a tensor library which was no longer being supported. I knew that this would be an exciting opportunity for the summer as it would complement my preparation for my highly theoretical and painful PhD comprehensive exams well.

Among the listed projects, there was one that immediately caught my eye: “Implementing a Truncated Dirichlet Process Functionality to PyMC3”. It reminded me of the 2020 DP Summer School where I did not understand anything about this fancy statistical model. Unaware of how steep the learning curve ahead of me would be, I applied for this project and am happy that I did.

PyMC: An Elevator Speech

For the uninitiated, here is a summary of the PyMC framework. For the initiated, you are still welcome to read this part and criticize my summary below.

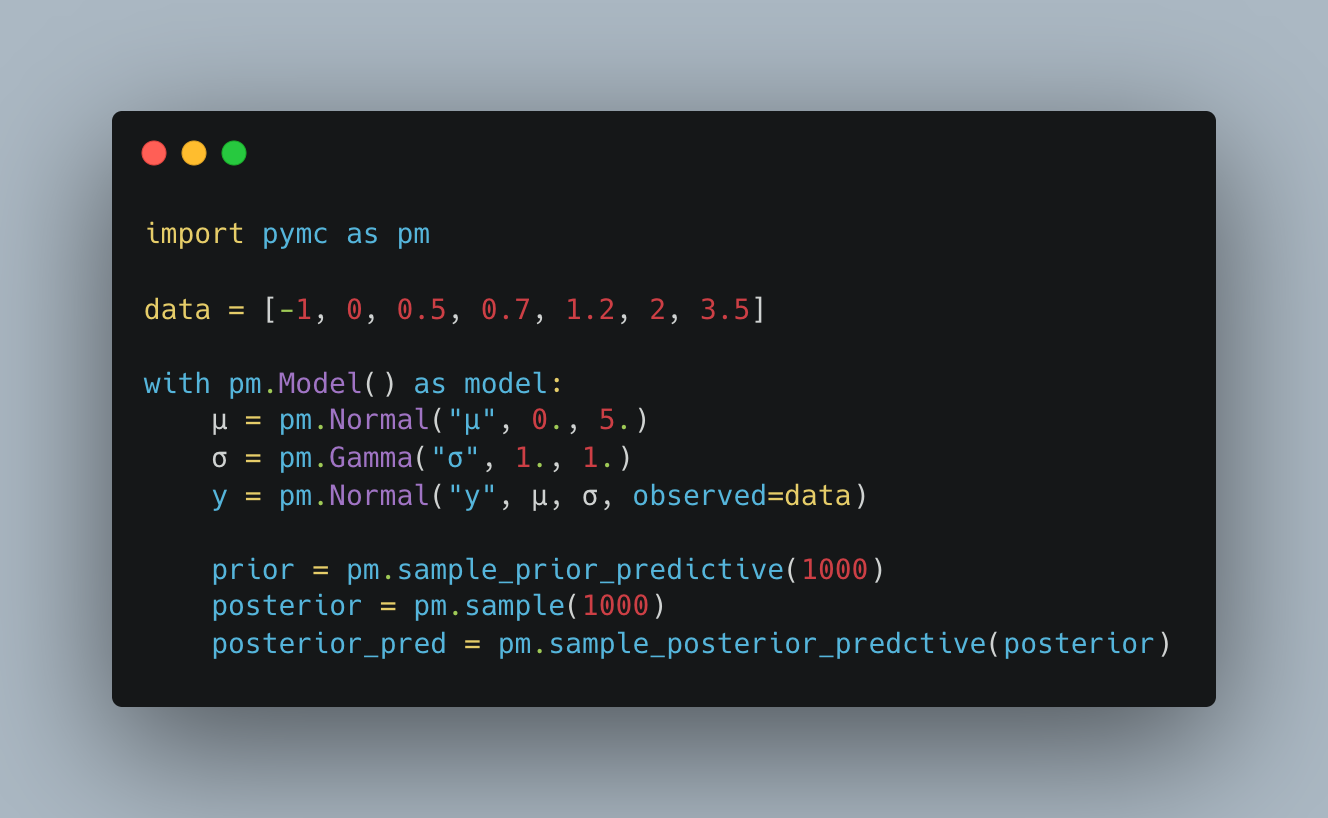

PyMC is a probabilistic programming package for fitting Bayesian models. The previous major version is PyMC3 which relied on a tensor library called Theano; the newest major release, PyMC or PyMCv4.0, is now built on Aesara, a Python library heavily inspired by Theano for symbolic computation. Under a pm.Model() context manager, users are able to define their statistical model. Random variables can be observed or not and a key advantage of PyMC is that posterior sampling, which is of key interest in the Bayesian statistical paradigm, can be done automatically and efficiently.

For instance, here is the conventional model for normally distributed data with conjugate priors:

\[\begin{align*} y \, | \, \mu, \sigma^2 \stackrel{\text{i.i.d.}}{\sim} &\mathcal{N}(\mu, \sigma^2)\\ \mu \sim &\mathcal{N}(0, 5)\\ \sigma \sim &\text{Gamma}(1, 1) \, . \end{align*}\]

Given that the Python object data is the observed data, the PyMC model would be written as follows, with prior, posterior and posterior predictive sampling:

Summer 2021: Delta Variant and GSoC

Marginally, the 2021 summer was alright. Conditioned on GSoC, it was great; conditioned on everything else, it was quite underwhelming.

On the personal side of things, I moved out of the apartment where I stayed in Montreal for my first year as a PhD student despite being enrolled in the University of Toronto. Around July, I finally moved to Toronto, but the pandemic was still around especially with the fear of the Delta variant rising. As our department was getting ready for a remote fall semester, I was studying for my theoretical exams, mostly alone, as my whole year of coursework was not only stressful but borderline useless for these exams (outside of the course in Survival analysis). Thankfully, I received a solid foundation in statistical theory from my Master’s at McGill and I felt confident that I could do relatively well on these exams all while immersing myself into the PyMC codebase. I barely knew anyone when moving to Toronto, so I went skateboarding almost every day when it did not rain. As a result of overexercising, I sprained my ankle on July 17, 2021.

On the flip side of the coin, GSoC was definitely a highlight of the summer. I am very grateful for my mentors Chris Fonnesbeck and Austin Rochford who guided me through my first steps into the open-source world of PyMC. The learning curve was steep, as Dirichlet Processes are a theoretically challenging topic; I started with conducting simulation studies, managing Git branches and getting acquainted with the Distribution and RandomVariable structure that underpins Aesara and PyMC. None of these tasks actually entailed creating the submodule, but these intermediate learning steps were crucial. Above all, through this experience, I was able to interact with a community of people from various countries and, beyond the technical skills that I have acquired, the implicit networking that I have done through contributing to open-source was invaluable.

September 2021: Still Online…

I passed my theory exams for my PhD program and my involvement with PyMC continued. As I started creating more issues and pull requests, I was learning a lot by doing and my confidence grew. My interactions with members of the PyMC community were always positive. Effectively, I was learning about a highly specialized technical topic, (probabilistic) programming, and establishing connections with passionate individuals; these were feelings that I was expecting from my PhD studies which were highly impacted by the global pandemic.

Winter 2021-2022: Omicron Variant, Finding Support

As winter and the Omicron variant came, more lockdowns were issued in Canada and restrictions were strengthened during the holidays. We remained online for the fourth consecutive term of my studies and the future was getting bleak on many levels. However, this is when I realized that the PyMC community had many members who had pursued a PhD degree and perhaps experienced some of the confusion that I was feeling, although outside of a pandemic. I figured, why not reach out to them for some support and perhaps some advice about my studies and prospective professional career? Below are four tips that community members generously shared with me.

Tip #1: It’s important to know how to stop.

As graduate students, we are often just working, working and working. The endless effort put into our thesis or academic development may stem from our ambition or lack of other productive things to do. Regardless, striking a proper work-life balance is important and we are seldom taught how to properly take breaks. This was effectively the case for me as I knew very well how to dedicate time to my projects and partake in more. It was a gradual yet important realization that, as much as I enjoy my studies, programming and statistics, it is equally or even more important to take breaks.

Tip #2: No skipping steps

Dirichlet processes are primarily used within mixture models (DP Mixture) to model multi-modal data, that is, data whose distribution has several peaks. To create some pymc.DirichletProcessMixture functionality, I need to think about the API for a general DP object. Before even thinking about how to design a general DP API for PyMC, I need to know DPs, which are fairly daunting in theory. From a statistical theory perspective, I need to know:

- what they are by definition;

- why they can be used as priors for random probability measures;

- the many representations of a DP;

- how to conduct a DP simulation study in NumPy.

From a programming perspective, I need to learn how to:

- manage branches via Git;

- create pull requests and rebase them;

- create a custom distribution class in PyMC;

- debug Aesara.

All in all, the creation of a DP submodule requires that I go through the many learning steps listed above, and none should or can be skipped. Slowly working through these sequential objectives helped me overcome the imposter syndrome that I felt as I dove head first into this project.
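To make one of these steps concrete, here is a minimal sketch of what a small DP simulation in NumPy could look like. It uses the truncated stick-breaking representation, which is only one of the many representations of a DP, and everything in it is an illustrative assumption: the function names, the standard normal base distribution, the concentration parameter alpha = 2 and the truncation level K = 50.

```python
import numpy as np


def truncated_stick_breaking(alpha, K, rng):
    """Draw K mixture weights from a truncated stick-breaking construction."""
    # Each beta is the fraction broken off the stick that is left.
    betas = rng.beta(1.0, alpha, size=K)
    # Truncation: the last piece takes all that remains, so weights sum to 1.
    betas[-1] = 1.0
    # Length of stick remaining before each break: prod_{j<k} (1 - beta_j).
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - betas[:-1])))
    return betas * remaining


def sample_truncated_dp(alpha, K, base_sampler, rng):
    """Draw one (truncated) random probability measure: weights and atoms."""
    weights = truncated_stick_breaking(alpha, K, rng)
    atoms = base_sampler(K, rng)  # i.i.d. draws from the base distribution
    return weights, atoms


def standard_normal(size, rng):
    """Illustrative base distribution: a standard normal."""
    return rng.normal(loc=0.0, scale=1.0, size=size)


rng = np.random.default_rng(2022)
weights, atoms = sample_truncated_dp(
    alpha=2.0, K=50, base_sampler=standard_normal, rng=rng
)

# The realized measure is discrete, so data drawn from it contain ties.
draws = rng.choice(atoms, size=1000, p=weights)
n_distinct = np.unique(draws).size
```

A larger alpha spreads the weights over more atoms, so n_distinct tends to grow with it; repeating the simulation for several values of alpha is a simple way to build intuition before touching any PyMC code.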

Tip #3: Be confident!

While this advice may sound generic, it’s always nice to hear it from someone else, especially when stuck on something daunting, e.g. job hunting, debugging code, facing set-backs, etc. As graduate students, the process of spending several years writing a single document can be exhausting as there is always something more to work on (see tip #1). We are also often blinded by the work that needs to be done and the hurdles that we encounter as we fail to be proud of how far we have come in our studies. Building confidence is difficult yet important and, no matter the challenges we encounter, there is always reason to be proud of ourselves.

Tip #4: Know how much you are worth. Highly compensated yet fun work is available.

For graduate students, the thesis is the ticket to graduation and any other non-academic work can be a distraction from this precious ticket. An inadvertent consequence is that we often do not realize the value that we could bring in the workforce nor the out-of-academia jobs that would satisfy the desire to tackle scientifically advanced or innovative problems. In short, there are fun jobs that pay well as graduate education in a highly technical area is worth a lot (who would have known??).

Summer 2022 and Beyond

While PyMC permits the use of truncated DP Mixtures, a nice API is yet to be made available. This is a work-in-progress and it is one of the objectives of my 2022 Google Summer of Code project. As mentioned in the first paragraph of this post, I will be diving deeper into Aesara and AePPL to better understand the computational engine of PyMC while having the DP submodule as the final end product. I will visit my former department at the University of Bordeaux and attend PyData London in mid-June. For the rest of the summer, a good portion will be devoted to relaxing, coding and enjoying some time off from my studies. I must give very special thanks to my amazing PhD advisors, Dr. Olli Saarela and Dr. Eleanor Pullenayegum, for their patience, guidance and support throughout my doctoral studies so far.

For the future, I hope to stay involved within the PyMC community. It is becoming more and more apparent that the future for developing statistical software is bright, i.e. full of job opportunities. With a DP functionality available, an interesting avenue to pursue would be to create a Bayesian non-parametric toolbox, since Gaussian Processes and Bayesian Additive Regression Trees (BART) are already available within PyMC. As for my PhD, I am looking forward to finally being in-person, mingling with my peers and continuing my research in dynamic treatment regimes.